New silicon with racks: AI infrastructure announcements from Microsoft Ignite 2023

In 2022, Microsoft aimed to lead in chipset designs for the cloud, inspired by AWS, GCP, and Apple. Today, they're making strides to transform Azure into the global computer. This blog delves into pivotal AI announcements at Microsoft Ignite 2023, shaping the era of copilots.

AI

Richard Dean

11/30/2023 · 5 min read

In an era of rapidly evolving technology, Microsoft is positioning itself to become the world's digital powerhouse. Azure already hosts the most powerful supercomputer in the public cloud, ranked third largest in the world, and that is just the tip of the iceberg. As Satya Nadella, Microsoft's CEO, revealed at the Microsoft Ignite 2023 conference, only a fraction of this supercomputing capacity has been made public.

Welcome to the “Age of Copilots”, a term coined during Ignite 2023. This new era signifies a shift towards AI-centric infrastructure designed to scale efficiently and cost-effectively, serving not only Microsoft's own needs but also future third-party workloads.

In 2022, Microsoft's Senior Leadership team announced their ambition to become a leading provider of chipset designs, particularly for the cloud. Their vision was to build a comprehensive stack, ranging from silicon and middleware to devices and applications. This was in response to the advancements made by AWS with their Graviton server chips, GCP with their TPU, and Apple with their M series.

Fast forward to the present, and Microsoft is unmistakably progressing towards transforming Azure into the world's computer. This blog post delves into the pivotal AI infrastructure announcements from Microsoft Ignite 2023, set to propel this new age of copilots.

Unveiling the Future of AI Infrastructure

Microsoft’s Ignite 2023 was a testament to the dawn of the “Age of Copilots”. The kickoff keynote by Satya Nadella underscored the company’s commitment to becoming the world’s computer. The first major announcement was about the AI infrastructure layer, a critical component in realizing this vision.

Microsoft understands that the key to success lies in having ample, cost-effective computing power and custom silicon. After announcing Microsoft’s commitment to achieving 100% zero-carbon energy in their datacenters by 2025 and the manufacturing of their own hollow core fiber for improved datacenter speeds, Nadella introduced Azure Boost.

Azure Boost is a system that offloads traditional hypervisor and host OS processes, such as networking, storage, and host management, onto purpose-built hardware and software. These capabilities are already being deployed within Microsoft’s datacenters, where they benefit millions of customer VMs in production today, and customers can purchase Azure Boost-enabled VMs in specific sizes. The supported sizes, however, are both memory-optimized and storage-optimized. They offer high memory-to-core ratios suited to relational database servers, medium to large caches, and in-memory analytics, along with up to 12.5 GBps of disk throughput and 650K IOPS at low latency for applications that need fast local storage. These are high-end capabilities that not every organization will require for its virtual machines.
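To put the two storage figures in perspective, the short Python sketch below works out which limit binds at a given I/O size. It is only an illustration under stated assumptions: that "GBps" means 10^9 bytes per second, and that 12.5 GBps and 650K IOPS are independent peak limits on the same disk. The function name `limiting_factor` is made up for this example and is not part of any Azure tooling.

```python
# Assumptions (not from the announcement): "GBps" is 10**9 bytes per second,
# and the two figures are independent peak limits for the same local disk.
MAX_THROUGHPUT_BYTES_PER_S = 12.5e9
MAX_IOPS = 650_000


def limiting_factor(io_size_bytes: int) -> tuple[float, str]:
    """Return the achievable bytes/s and which limit binds at this I/O size."""
    iops_bound = MAX_IOPS * io_size_bytes
    if iops_bound < MAX_THROUGHPUT_BYTES_PER_S:
        return iops_bound, "iops"
    return MAX_THROUGHPUT_BYTES_PER_S, "throughput"


# I/O size at which both limits are reached at the same time.
break_even_bytes = MAX_THROUGHPUT_BYTES_PER_S / MAX_IOPS

for size in (4 * 1024, 16 * 1024, 64 * 1024):
    rate, limit = limiting_factor(size)
    print(f"{size // 1024:>3} KiB I/O: {rate / 1e9:.2f} GB/s, bound by {limit}")
print(f"break-even I/O size: {break_even_bytes:.0f} bytes")
```

Under these assumptions the break-even point is roughly 19.2 KB per operation: workloads issuing smaller I/Os (typical of transactional databases) would hit the IOPS ceiling first, while larger sequential reads would hit the throughput ceiling.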

Introducing Azure Cobalt and AI Accelerators

The next big reveal was Azure Cobalt, Microsoft’s first custom in-house CPU series. Cobalt is a 64-bit, 128-core Arm-based processor that Microsoft describes as the fastest offered by any cloud provider, and it is already powering Microsoft Teams, Azure SQL Database, and other workloads. Cobalt-powered VMs will be available to customers in 2024, marking a significant milestone in Microsoft’s journey.

Nadella also highlighted Microsoft’s partnership with Nvidia, along with the upcoming ND H200 v5 series and the NCCv5 series of confidential virtual machines. The NCCv5 machines, equipped with NVIDIA H100 Tensor Core GPUs, offer a secure environment for deploying GPU-powered applications, which makes them particularly promising for developers building retrieval-augmented generation (RAG) solutions. The NCCv5 series is currently in preview. The ND H200 v5 VM series, on the other hand, is designed to provide customers with enhanced performance, reliability, and efficiency, making it ideal for mid-range AI training and generative AI inferencing.

The Power of AMD and the ND MI300 v5 Virtual Machines

The ND MI300 v5 virtual machines, optimized for generative AI workloads, are another exciting development. These machines feature AMD’s latest GPU, the AMD Instinct MI300X, and boast industry-leading memory speed and capacity. They are designed to serve more models faster with fewer GPUs, making them ideal for high-range AI model training and generative inferencing. GPT-4 is already running on the MI300!

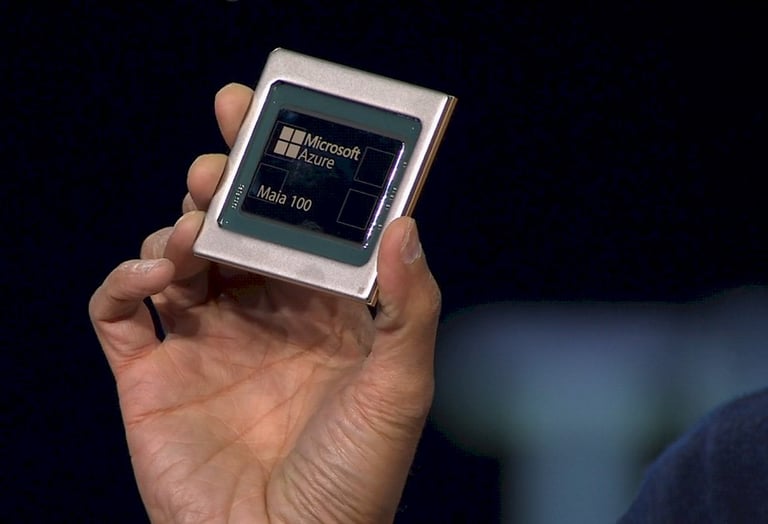

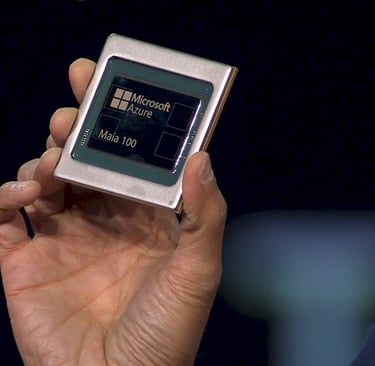

The Dawn of the Azure Maia

The final announcement was the unveiling of the Microsoft Azure Maia 100, Microsoft’s first custom in-house AI accelerator chip. It is designed to run cloud-based LLM training and inferencing workloads, such as those behind OpenAI models, Bing, GitHub Copilot, and ChatGPT. The Maia 100 is a technological marvel, manufactured on a 5nm process and boasting 105 billion transistors, making it one of the largest chips that can be made with current technology. However, Maia is more than just a chip: it comes with a complete reimagining of the traditional server rack, tailored to the power, cooling, and network density that AI workloads demand from hardware. Microsoft plans to deploy Azure Maia initially for its own workloads and, as it scales up, to offer it to third-party workloads.

Will this Big Bet pay off?

Microsoft's Ignite 2023 conference has made it clear that the company is making a significant investment in AI, ushering in what it calls the "Age of Copilots". The introduction of Azure Boost, Azure Cobalt, the ND MI300 v5 virtual machines, and Azure Maia are all part of this strategic move. However, the question remains: will this big bet pay off?

The technologies unveiled are undoubtedly impressive, but their success ultimately depends on whether customers are willing to pay the premiums (not yet known) and to accept the risks. Remember that ChatGPT hit the scene barely one year ago, and since then the White House, CISA, and the EU, through its Artificial Intelligence Act, have each begun trying to set guidelines around AI safety.

While the advent of Generative AI in 2023 has paved the way for Microsoft Copilots, it is conceivable that Copilot-related items might not dominate the IT priorities of many organizations in 2024. Typically, with novel technologies, affluent organizations and third-party vendors have the luxury of being early adopters and absorbing associated risks. However, as AI scales more efficiently, becomes more cost-effective, and gains widespread adoption, IT administrators, infrastructure specialists, security experts, and network engineers will inevitably need to acquaint themselves with these evolving technologies and advancements.

As we move forward, it will be interesting to see how these developments shape the future of AI and its infrastructure, and whether Microsoft's investment in clean power sources, new fiber and custom silicon will indeed usher in the "Age of Copilots".

If you want to learn more, be sure to visit the Ignite News website, the Book of News, or YouTube for on-demand content about these topics and many more.